PART-1: How to Upload Data (Test Cases) from an Excel Sheet to HP Quality Center 11.0

Solution: To upload data from an Excel sheet to HP QC, follow the steps below:

2. Click the Add-Ins link on the HP Quality Center start page, and then click the “HP Quality Center Connectivity” link in the window that opens.

3. After you click this link, a new window opens.

4. Click the “Download Add-in” link, then download and install the HP Quality Center Connectivity Add-in.

5. Next, install the MS Excel Add-in: click the “More HP ALM Add-ins” link on the same Add-Ins page.

6. From the page that opens, download and install the MS Excel Add-in.

7. After installing the MS Excel Add-in, restart MS Excel if it is already open; otherwise, open the Excel workbook containing the test data (test cases) that you want to export to HP QC.
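If your test cases are kept somewhere other than a spreadsheet, a short script can lay them out as rows before you open them in Excel. The sketch below is a minimal, stdlib-only example under stated assumptions: the column names in `COLUMNS` and the one-row-per-design-step layout are hypothetical choices for illustration, not a format HP ALM requires, and the helpers `to_rows` and `write_csv` are invented here. It writes CSV, which Excel can open directly.

```python
import csv
import io

# Hypothetical column layout (one row per design step); rename to suit your sheet.
COLUMNS = ["Subject", "Test Name", "Description", "Step Name", "Step Description", "Expected Result"]


def to_rows(test_cases):
    """Flatten test cases (each with a list of steps) into one dict per design step."""
    rows = []
    for tc in test_cases:
        for step_no, step in enumerate(tc["steps"], start=1):
            rows.append({
                "Subject": tc["subject"],
                "Test Name": tc["name"],
                "Description": tc["description"],
                "Step Name": f"Step {step_no}",  # numbered step names, e.g. "Step 1"
                "Step Description": step["action"],
                "Expected Result": step["expected"],
            })
    return rows


def write_csv(rows, stream):
    """Write a header row followed by the step rows as CSV."""
    writer = csv.DictWriter(stream, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(rows)


if __name__ == "__main__":
    cases = [{
        "subject": "Login",
        "name": "Valid login",
        "description": "User logs in with valid credentials",
        "steps": [
            {"action": "Open the login page", "expected": "Login page is displayed"},
            {"action": "Enter a valid user name and password", "expected": "Home page is displayed"},
        ],
    }]
    buf = io.StringIO()
    write_csv(to_rows(cases), buf)
    print(buf.getvalue())
```

Save the output to a `.csv` file, open it in Excel (saving it as a workbook if you prefer), and continue with the export steps.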

8. The new “Export to HP ALM” add-in now appears on the Add-Ins tab.

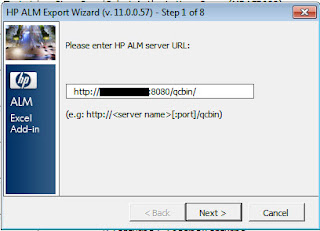

9. Click “Export to HP ALM”. A new HP ALM window opens and asks you to enter the HP ALM server URL.
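The server URL is usually the same address you use to open Quality Center in a browser, which for QC 11.0 typically has the form `http://<server>:<port>/qcbin`. The hypothetical helper `alm_server_url` below is a minimal sketch that normalizes a bare host name or partial URL into that form, assuming the default `/qcbin` path; if your site uses a different path, use the URL your administrator provides instead.

```python
from urllib.parse import urlsplit, urlunsplit


def alm_server_url(raw):
    """Normalize a server address to http(s)://host[:port]/qcbin (assumed default path)."""
    raw = raw.strip()
    if "://" not in raw:
        raw = "http://" + raw  # assume plain HTTP when no scheme is given
    parts = urlsplit(raw)
    if parts.scheme not in ("http", "https") or not parts.netloc:
        raise ValueError(f"not a valid server address: {raw!r}")
    path = parts.path.rstrip("/")
    if not path.lower().endswith("/qcbin"):
        path += "/qcbin"  # append the assumed default QC path
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


if __name__ == "__main__":
    print(alm_server_url("qcserver:8080"))
```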

10. Enter valid user credentials (user name and password) and click Next.

11. Select the domain and project, and click Next.

12. Select the type of data to export (for test cases, choose Tests) and click Next.

13. Type a name for a new map and click Next.

14. Map each Excel column to the corresponding HP ALM field, and then click Export.
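In the mapping step, each HP ALM field is paired with the letter of the Excel column that holds its values. A minimal sketch, assuming the sheet's header row uses the same labels as the HP ALM fields, can derive that pairing and flag obvious mistakes before you click Export. The functions `column_letter`, `mapping_from_header`, and `check_mapping`, and the `REQUIRED` field set, are hypothetical; the fields actually required depend on your project's customization.

```python
import string

# Assumed set of mandatory fields for this sketch; check your own project settings.
REQUIRED = {"Subject", "Test Name", "Step Name"}


def column_letter(index):
    """Convert a 0-based column index to an Excel column letter (0 -> A, 26 -> AA)."""
    letters = ""
    index += 1
    while index:
        index, rem = divmod(index - 1, 26)
        letters = string.ascii_uppercase[rem] + letters
    return letters


def mapping_from_header(header):
    """Map each non-empty header label (assumed to equal an ALM field name) to its column letter."""
    return {name.strip(): column_letter(i) for i, name in enumerate(header) if name.strip()}


def check_mapping(mapping, required=REQUIRED):
    """Return human-readable problems; an empty list means the mapping looks complete."""
    problems = [f"required field not mapped: {f}" for f in sorted(required - set(mapping))]
    used = {}
    for field, col in mapping.items():
        if col in used:
            problems.append(f"column {col} mapped to both {used[col]} and {field}")
        else:
            used[col] = field
    return problems


if __name__ == "__main__":
    header = ["Subject", "Test Name", "Description", "Step Name", "Step Description", "Expected Result"]
    mapping = mapping_from_header(header)
    print(mapping)
    print(check_mapping(mapping) or "mapping looks complete")
```

The printed field-to-letter pairs can then be entered in the wizard's mapping screen.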

15. The system displays a success message, and the uploaded test cases appear in HP QC under the Test Plan module.